Video-based human motion capture has been a very active research area for decades. The articulated structure of the human body, occlusions, partial observations and image ambiguities make it very hard to accurately track the many degrees of freedom of the human pose. Recent approaches have shown that adding sparse orientation cues from Inertial Measurement Units (IMUs) helps to resolve these ambiguities and improves full-body human motion capture. As a complementary data source, inertial sensors allow accurate estimation of limb orientations even under fast motions.

In the research landscape of marker-less motion capture, publicly available benchmarks for video-based trackers (e.g. HumanEva, Human3.6M) generally lack inertial data. One exception is the MPI08 dataset, which provides inertial data from 5 IMUs along with video data.

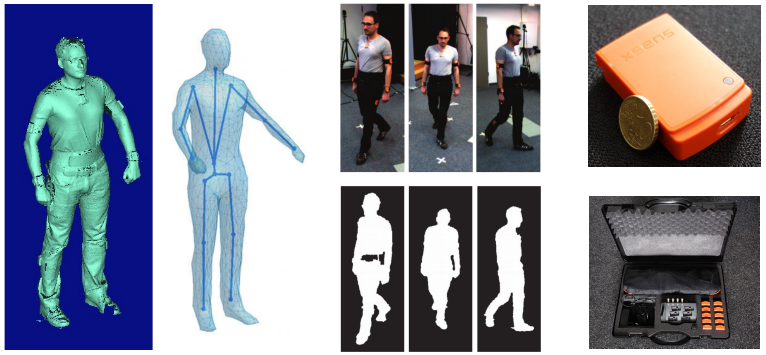

This new dataset, called TNT15, consists of synchronized data streams from 8 RGB cameras and 10 IMUs. In contrast to MPI08, it was recorded in a normal office environment, and its larger set of 10 IMUs can be used to develop new tracking approaches or to improve evaluation.